By Laine Lovgren, 2025 HEDCO Institute Undergraduate Scholar, University of Oregon

The HEDCO Institute Undergraduate Scholars Program gives future educators and researchers a hands-on look at research and knowledge mobilization while they are undergraduates at the University of Oregon. Since launching in 2023, the program has hosted three cohorts of undergrads for a year of immersive training in research synthesis and knowledge mobilization—skills that help bring evidence-based practices into K-12 education.

In this post, we’re spotlighting the work of our 2024 and 2025 scholars, starting with an article from Laine Lovgren, a Child Behavioral Health student at UO Portland, who helped HEDCO Institute researchers train an AI tool for research screening. Then, check out the project from our 2024 scholars, who developed a user-friendly brief to accompany their peer-reviewed publication, which highlights gaps in education research.

How can AI support research synthesis?

A student’s perspective

What is research synthesis?

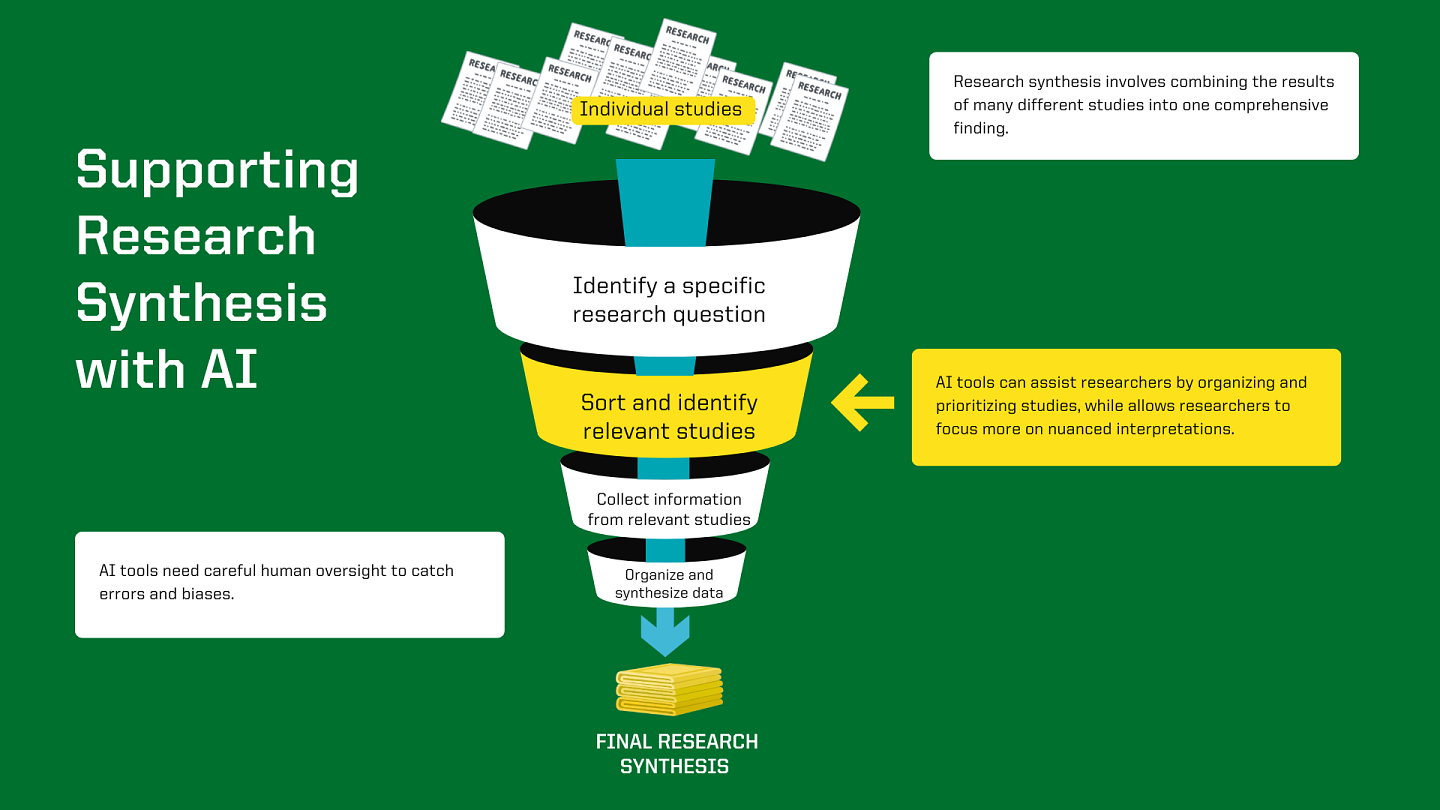

Research synthesis involves combining the results of many different studies into one comprehensive finding. This gives researchers a bigger picture, which they can use to better inform the policymaking, education, and healthcare systems that serve public needs.

This sounds relatively easy, right? Just pool all the data and deliver it to decision-makers!

But in practice, research synthesis is a tedious process that takes a lot of time and resources, especially when it must be done both efficiently and accurately.

From figuring out what information to capture and how to search for it, to manually filtering studies to decide which ones are worth keeping or dropping, this process can take a year or more.

AI in research synthesis

DistillerSR’s AI uses Natural Language Processing (NLP) to help researchers cut down the time spent organizing and filtering studies. DistillerSR’s AI-assisted screening algorithm is not a generative AI like ChatGPT. Rather, NLP models make sense of human language and use that understanding to connect human input with data from studies. This AI tool can be strengthened over time with more human input, which means AI can help consolidate research in meaningful ways so that decision-makers can use it in policy and practice.

AI can also be used to update Living Systematic Reviews, a type of systematic review that is updated to include new studies over time. Researchers typically update Living Reviews by hand, which can be time-consuming. AI can streamline this process by flagging new studies for researchers to review and then include in or exclude from the review. This type of review can be especially important in areas where evidence changes quickly, such as healthcare, climate policy, education, and public safety.

A learner’s experience with study selection

As an Undergraduate Scholar at the HEDCO Institute, I’ve had the opportunity to get hands-on experience (~200 hours) with study selection to help train DistillerSR’s DAISY AI classifier. Another undergraduate scholar, a PhD-level researcher, and I answered “yes,” “no,” or “unclear” to questions such as “Is this study a systematic review?” for 3,715 studies. From this input, the classifier learned to detect studies that included information relevant to the research synthesis the HEDCO Institute is performing (i.e., depression prevention interventions in K-12 schools). Once the classifier has enough responses, the goal is for it to accurately advise researchers whether to keep or drop each study by answering every relevance question with “yes” or “no.”

Through this experience, I learned just how much variation exists in the way research studies use language and describe methods. This is true even for studies that appear to investigate the same topic. These differences can make it difficult to compile research into a single review.

For example, one study might define the threshold for a depression diagnosis differently than another. The interventions used for those two populations now have different implications: one might be effective for at-risk or subclinical depression, while another might work better for people with a full diagnosis. Including both in a review focused only on at-risk populations could result in overrepresentation and unintentionally distort the conclusions.

This overrepresentation could make an intervention appear more effective than it truly is. As a result, depression prevention programs could be adopted in settings like K-12 schools even if they are not as effective for students as the review suggests.

This experience showed me how important thoughtful decision-making is in creating clear definitions and terms in research synthesis. It also showed me that while AI tools can assist in screening, a trained human still needs to be part of the process.

Challenges with AI-assisted research synthesis

While AI tools can help with scanning documents at lightning speed, consolidating information, and learning from human input, there are some caveats. These tools, in their current state, can create biased results—they are learning from humans, after all.

The accuracy of the AI classifier depends partly on the people who train it, because each researcher’s knowledge and lived experience shape how they label studies. This becomes an issue when people interpret the same definitions differently. One person training an AI classifier might consider a study exploring “math anxiety” worth keeping, while another person may not.

This is why it is important for researchers to follow best practices for AI-assisted research synthesis. The Open Science Framework has created a best-practices guide for AI-assisted research. Its guidelines include being transparent about how AI is used and what its potential limitations are, upholding legal standards, and staying up to date.

Additionally, researchers follow established best practices when performing research synthesis to ensure that the process is rigorous and transparent. The CASP Checklists can be used to critically appraise studies and establish their quality, and the PRESS Guideline can be used to peer review the search strategies used to find those studies. For example, an appraisal might check whether researchers established a detailed study protocol, which enhances transparency and reproducibility and, in turn, builds trust and supports better decision-making.

A widely used checklist is PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses). It is a standardized 27-item checklist often used when reporting systematic reviews and meta-analyses to improve transparency and reduce bias.

Conclusion

AI tools can make research synthesis more efficient and more responsive to an ever-evolving body of evidence. They can assist researchers by organizing and prioritizing studies, freeing researchers to focus on more nuanced interpretation.

At the same time, AI needs careful human oversight to catch errors and biases. When AI is paired with this oversight, it has the potential to streamline research synthesis so that evidence can get into the hands of those who need it most.

HEDCO Institute article 27 - January 29, 2025